Research at MSP

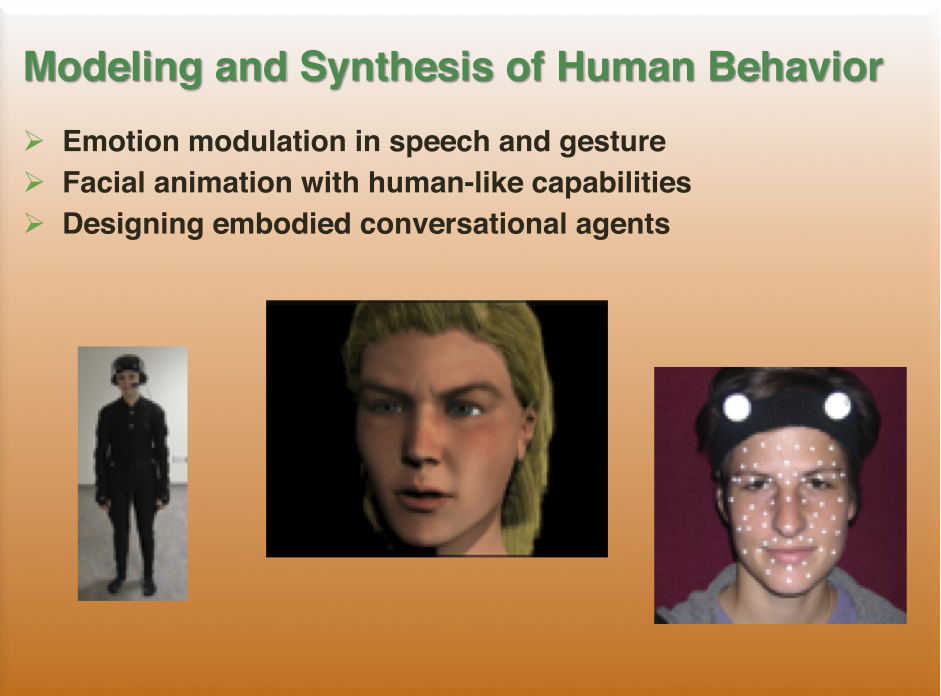

Modeling and synthesis of human behavior

The verbal and non-verbal channels of human communication are internally and intricately connected. As a result, gestures and speech are highly correlated and coordinated. This relationship is strongly affected by the linguistic and emotional content of the message being communicated. Consequently, the emotional modulation observed in the different communicative channels is not uniformly distributed. In the face, the upper region has more degrees of freedom to convey non-verbal information than the lower region, which is highly constrained by the underlying articulatory processes. The same type of interplay is observed in various aspects of speech. In the spectral domain, some broad phonetic classes, such as front vowels, show stronger emotional variability than others (e.g., nasal sounds), suggesting that for some phonemes the vocal tract features do not have enough degrees of freedom to convey the affective goals. Likewise, gross statistics of the fundamental frequency (F0), such as the mean, maximum, minimum, and range, are more emotionally prominent than the features describing the F0 shape, which are hypothesized to be closely related to the lexical content. Interestingly, a joint analysis reveals that when one modality is constrained by the articulatory speech process, other channels with more degrees of freedom are used to convey the emotions. For example, facial expressions and speech prosody tend to show stronger emotional modulation when the vocal tract is physically constrained by articulation to convey other linguistic communicative goals.
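To make the "gross statistics" of the fundamental frequency concrete, the following is a minimal sketch of how sentence-level F0 mean, minimum, maximum, and range might be computed from a pitch contour. The function name `f0_gross_statistics`, the convention that unvoiced frames are marked with 0.0, and the example contour are all assumptions made for illustration; they are not taken from the publications listed below.

```python
from typing import Dict, Sequence


def f0_gross_statistics(f0_hz: Sequence[float]) -> Dict[str, float]:
    """Sentence-level F0 statistics computed over voiced frames only.

    Assumption: unvoiced frames are marked with 0.0, a common
    pitch-tracker convention that the text above does not specify.
    """
    # Keep only voiced frames so unvoiced zeros do not bias the statistics.
    voiced = [f for f in f0_hz if f > 0.0]
    if not voiced:
        raise ValueError("no voiced frames in contour")
    lowest, highest = min(voiced), max(voiced)
    return {
        "mean": sum(voiced) / len(voiced),
        "min": lowest,
        "max": highest,
        "range": highest - lowest,
    }


# Hypothetical F0 contour in Hz; 0.0 marks unvoiced frames.
contour = [0.0, 180.0, 200.0, 220.0, 0.0, 240.0, 160.0]
stats = f0_gross_statistics(contour)
print(stats)
```

These four values summarize the overall level and spread of the contour and deliberately discard its shape, which is the distinction the paragraph above draws between gross statistics and F0 shape features.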

As a result of the analysis and modeling, we present applications in recognition and synthesis of expressive communication.

Selected Publications:

- Najmeh Sadoughi and Carlos Busso, "Head motion generation," in Handbook of Human Motion, B. Muller, S.I. Wolf, G.-P. Brueggemann, Z. Deng, A. McIntosh, F. Miller, and W. Scott Selbie, Eds., pp. 1-25. Springer International Publishing, January 2017. [link-to-pdf] [soon cited] [bib]

- Najmeh Sadoughi, Yang Liu, and Carlos Busso, "Meaningful head movements driven by emotional synthetic speech," Speech Communication, vol. 95, pp. 87-99, December 2017. [pdf] [cited] [bib]

- Najmeh Sadoughi and Carlos Busso, "Expressive speech-driven lip movements with multitask learning," in IEEE Conference on Automatic Face and Gesture Recognition (FG 2018), Xi'an, China, July 2018. [pdf soon][soon cited] [bib]

- Najmeh Sadoughi and Carlos Busso, "Joint learning of speech-driven facial motion with bidirectional long-short term memory," International Conference on Intelligent Virtual Agents (IVA 2017), J. Beskow, C. Peters, G. Castellano, C. O'Sullivan, I. Leite, S. Kopp, Eds., vol. 10498 of Lecture Notes in Computer Science, pp. 389-402. Springer Berlin Heidelberg, Stockholm, Sweden, August 2017. [pdf] [cited] [bib] [slides]

- Najmeh Sadoughi and Carlos Busso, "Head motion generation with synthetic speech: a data driven approach," in Interspeech 2016, San Francisco, CA, USA, September 2016, pp. 52-56. [pdf] [cited] [bib] [slides] Nominated for Best Student Paper at Interspeech 2016!

- Najmeh Sadoughi and Carlos Busso, "Retrieving target gestures toward speech driven animation with meaningful behaviors," in International Conference on Multimodal Interaction (ICMI 2015), Seattle, WA, USA, November 2015, pp. 115-122. [pdf] [cited] [bib] [slides]

- Najmeh Sadoughi and Carlos Busso, "Novel realizations of speech-driven head movements with generative adversarial networks," in IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP 2018), Calgary, AB, Canada, April 2018. [pdf soon][soon cited] [pdf] [bib]

- Najmeh Sadoughi, Yang Liu, and Carlos Busso, "MSP-AVATAR corpus: Motion capture recordings to study the role of discourse functions in the design of intelligent virtual agents," in 1st International Workshop on Understanding Human Activities through 3D Sensors (UHA3DS 2015), Ljubljana, Slovenia, May 2015. [pdf] [cited] [bib] [slides]

- Soroosh Mariooryad and Carlos Busso, "Generating human-like behaviors using joint, speech-driven models for conversational agents," IEEE Transactions on Audio, Speech and Language Processing, vol. 20, no. 8, pp. 2329-2340, October 2012. [pdf] [cited] [bib]

- Carlos Busso, Murtaza Bulut, Chi-Chun Lee, Abe Kazemzadeh, Emily Mower, Samuel Kim, Jeannette Chang, Sungbok Lee, and Shrikanth S. Narayanan, "IEMOCAP: Interactive emotional dyadic motion capture database," Journal of Language Resources and Evaluation, vol. 42, no. 4, pp. 335-359, December 2008. [pdf] [cited] [bib]

- Carlos Busso and Shrikanth S. Narayanan, "Interrelation between speech and facial gestures in emotional utterances: a single subject study," IEEE Transactions on Audio, Speech and Language Processing, vol. 15, no. 8, pp. 2331-2347, November 2007. [pdf] [cited] [bib]

- Carlos Busso, Zhigang Deng, Michael Grimm, Ulrich Neumann, and Shrikanth S. Narayanan, "Rigid head motion in expressive speech animation: Analysis and synthesis," IEEE Transactions on Audio, Speech and Language Processing, vol. 15, no. 3, pp. 1075-1086, March 2007. [pdf] [cited] [bib]

- Carlos Busso, Zhigang Deng, Ulrich Neumann, and Shrikanth S. Narayanan, "Natural head motion synthesis driven by acoustic prosodic features," Computer Animation and Virtual Worlds, vol. 16, no. 3-4, pp. 283-290, July 2005. [pdf] [cited] [bib] [slides]
