Human-Centered Research Lab

Research at MSP

Affective state recognition

Emotion plays a crucial role in day-to-day interpersonal interactions. Recent findings suggest that emotion is integral to rational and intelligent decision-making. It helps us relate to each other by expressing our feelings and providing feedback. This important aspect of human interaction should be considered in the design of human-machine interfaces (HMIs). To build interfaces that are more in tune with users' needs and preferences, it is essential to study how emotion modulates and enhances the verbal and nonverbal channels of human communication.

Selected Publications:

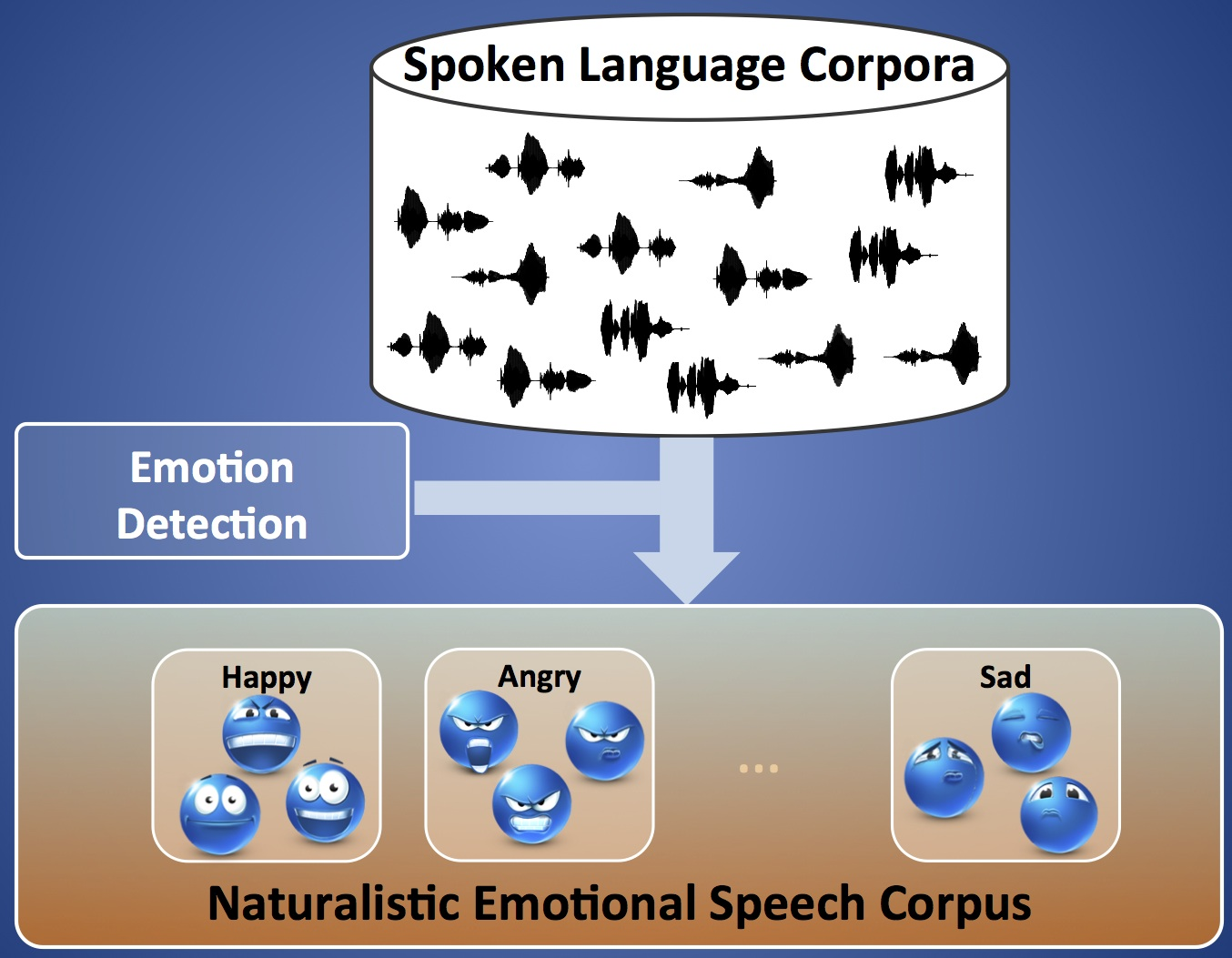

- Reza Lotfian and Carlos Busso, "Building naturalistic emotionally balanced speech corpus by retrieving emotional speech from existing podcast recordings," IEEE Transactions on Affective Computing, to appear, 2018.

- Carlos Busso, Srinivas Parthasarathy, Alec Burmania, Mohammed AbdelWahab, Najmeh Sadoughi, and Emily Mower Provost, "MSP-IMPROV: An acted corpus of dyadic interactions to study emotion perception," IEEE Transactions on Affective Computing, vol. 8, no. 1, pp. 119-130, January-March 2017.

- Srinivas Parthasarathy, Roddy Cowie, and Carlos Busso, "Using agreement on direction of change to build rank-based emotion classifiers," IEEE/ACM Transactions on Audio, Speech, and Language Processing, vol. 24, no. 11, pp. 2108-2121, November 2016.

- Reza Lotfian and Carlos Busso, "Formulating emotion perception as a probabilistic model with application to categorical emotion classification," in International Conference on Affective Computing and Intelligent Interaction (ACII 2017), San Antonio, TX, USA, October 2017, pp. 415-420.

- Srinivas Parthasarathy and Carlos Busso, "Jointly predicting arousal, valence and dominance with multi-task learning," in Interspeech 2017, Stockholm, Sweden, August 2017, pp. 1103-1107. Nominated for Best Student Paper at Interspeech 2017!

- Mohammed Abdelwahab and Carlos Busso, "Incremental adaptation using active learning for acoustic emotion recognition," in IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP 2017), New Orleans, LA, USA, March 2017, pp. 5160-5164.

- Srinivas Parthasarathy, Reza Lotfian, and Carlos Busso, "Ranking emotional attributes with deep neural networks," in IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP 2017), New Orleans, LA, USA, March 2017, pp. 4995-4999.

- Mohammed Abdelwahab and Carlos Busso, "Ensemble feature selection for domain adaptation in speech emotion recognition," in IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP 2017), New Orleans, LA, USA, March 2017, pp. 5000-5004.

- Alec Burmania, Srinivas Parthasarathy, and Carlos Busso, "Increasing the reliability of crowdsourcing evaluations using online quality assessment," IEEE Transactions on Affective Computing, vol. 7, no. 4, pp. 374-388, October-December 2016.

- Soroosh Mariooryad and Carlos Busso, "Facial expression recognition in the presence of speech using blind lexical compensation," IEEE Transactions on Affective Computing, vol. 7, no. 4, pp. 346-359, October-December 2016.

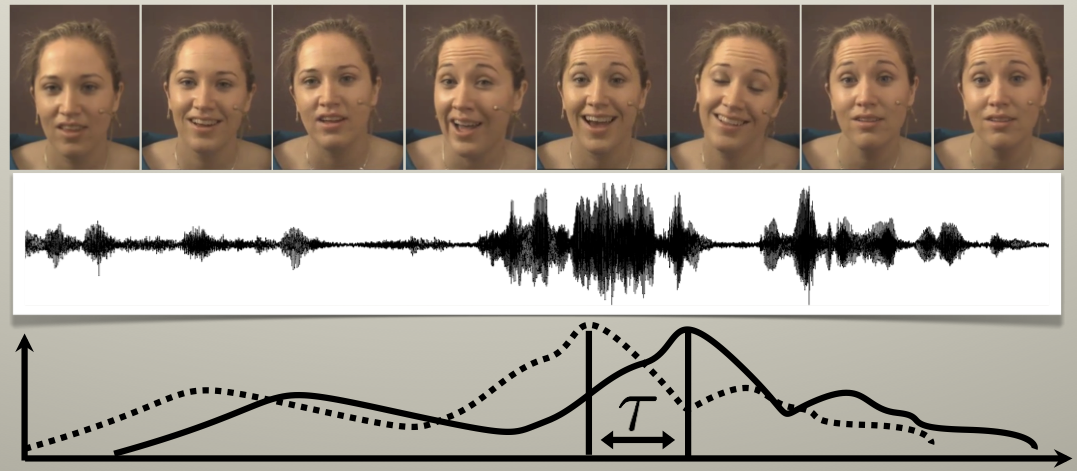

- Soroosh Mariooryad and Carlos Busso, "Correcting time-continuous emotional labels by modeling the reaction lag of evaluators," IEEE Transactions on Affective Computing, vol. 6, no. 2, pp. 97-108, April-June 2015. (Special Issue: Best of ACII)

- Soroosh Mariooryad and Carlos Busso, "Compensating for speaker or lexical variabilities in speech for emotion recognition," Speech Communication, vol. 57, pp. 1-12, February 2014.

- Juan Pablo Arias, Carlos Busso, and Nestor Becerra Yoma, "Shape-based modeling of the fundamental frequency contour for emotion detection in speech," Computer Speech and Language, vol. 28, no. 1, pp. 278-294, January 2014.

- Carlos Busso, Soroosh Mariooryad, Angeliki Metallinou, and Shrikanth S. Narayanan, "Iterative feature normalization scheme for automatic emotion detection from speech," IEEE Transactions on Affective Computing, vol. 4, no. 4, pp. 386-397, October-December 2013.

- Soroosh Mariooryad and Carlos Busso, "Exploring cross-modality affective reactions for audiovisual emotion recognition," IEEE Transactions on Affective Computing, vol. 4, no. 2, pp. 183-196, April-June 2013.

(c) Copyright. All rights reserved.