MSP-Face corpus: A natural audiovisual emotional database

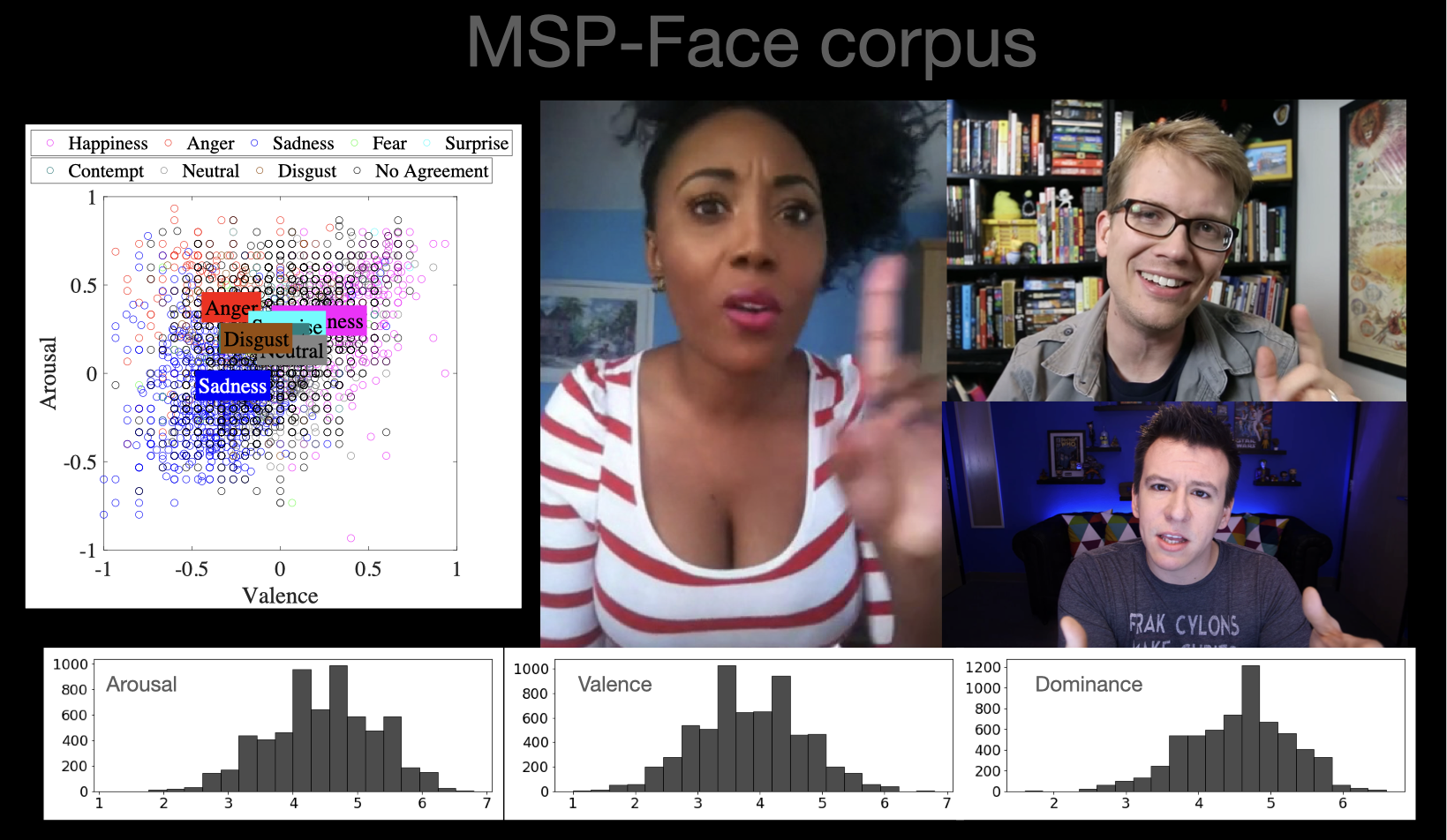

The MSP-Face database is a natural emotional multimodal corpus collected from video-sharing websites. The recordings include people in front of a camera speaking about different situations from their daily life, giving their opinions. The videos consist of natural and spontaneous recordings, where the emotions are not acted or artificially elicited. The data collection protocol is flexible and scalable, and addresses key limitations of existing multimodal databases. The data was collected from multiple participants, expressing a broad range of emotions, which is not easily achieved with other data collection protocols.

A feature of the corpus is that it comes in two sets. The first set includes videos that have been annotated with emotional labels using a crowd-sourcing protocol. This set has 9,370 recordings (24 hrs, 41 mins). The second set includes similar videos without emotional labels, offering a resource for exploring semi-supervised and unsupervised machine-learning algorithms on natural emotional videos. This set has 15,011 recordings (38 hrs, 3 mins).


This corpus is annotated with emotional labels using attribute-based descriptors (activation, dominance and valence) and categorical labels (anger, happiness, sadness, disgust, surprise, fear, contempt, neutral and other). With over 62 hrs of labeled and unlabeled data, this corpus provides an important resource that complements and extends existing emotional databases. The applications that we have in mind include emotion recognition from videos, and visual agents with expressive behaviors.
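As a quick sanity check on the sizes quoted above, the following minimal sketch adds up the two sets and confirms the "over 62 hrs" figure. The `Subset` class and `corpus_totals` function are illustrative names introduced here, not part of the corpus release; only the recording counts and durations come from this page.

```python
from dataclasses import dataclass
from typing import Iterable, Tuple


@dataclass(frozen=True)
class Subset:
    """Hypothetical container for the size of one set of the corpus."""
    name: str
    recordings: int
    hours: int
    minutes: int

    @property
    def total_minutes(self) -> int:
        # Convert the "hrs, mins" figure into minutes for easy summation.
        return self.hours * 60 + self.minutes


# Figures as stated on this page.
LABELED = Subset("labeled", 9_370, 24, 41)
UNLABELED = Subset("unlabeled", 15_011, 38, 3)


def corpus_totals(subsets: Iterable[Subset]) -> Tuple[int, int, int]:
    """Return (recordings, hours, minutes) summed over all subsets."""
    subsets = list(subsets)
    recordings = sum(s.recordings for s in subsets)
    hours, minutes = divmod(sum(s.total_minutes for s in subsets), 60)
    return recordings, hours, minutes


print(corpus_totals([LABELED, UNLABELED]))  # (24381, 62, 44)
```

The two sets together hold 24,381 recordings and 62 hrs, 44 mins of video, which matches the total stated above.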

The MSP-Face corpus is being recorded as part of our NSF project "RI: Small: Integrative, Semantic-Aware, Speech-Driven Models for Believable Conversational Agents with Meaningful Behaviors" (NSF IIS: 1718944). For further information on the corpus, please read:

- Andrea Vidal, Ali Salman, Wei-Cheng Lin, and Carlos Busso, "MSP-face corpus: A natural audiovisual emotional database," in ACM International Conference on Multimodal Interaction (ICMI 2020), Utrecht, The Netherlands, October 2020, pp. 397-405.

Release of the Corpus: Academic License

We are sharing this corpus with the research community. We provide the link to the videos and the code to segment the videos into the speaking turns used in the perceptual evaluation to annotate the emotional labels. This is the link to download the corpus: GitHub
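For readers wondering what segmenting a downloaded video into speaking turns might look like, here is a minimal sketch. It is not the code distributed with the corpus: the function name `build_cut_commands`, the `(start, end)` turn format in seconds, and the output naming scheme are all assumptions made for illustration. It only builds `ffmpeg` command lines (using the standard `-i`, `-ss` and `-t` options) and does not run them. The scripts in the corpus repository remain the authoritative way to reproduce the annotated segments.

```python
from typing import List, Sequence, Tuple


def build_cut_commands(
    video_path: str,
    turns: Sequence[Tuple[float, float]],
    out_prefix: str,
) -> List[List[str]]:
    """Build one ffmpeg command per speaking turn.

    `turns` is a hypothetical list of (start, end) times in seconds;
    the real corpus may describe its segments differently.
    """
    commands = []
    for index, (start, end) in enumerate(turns, start=1):
        if end <= start:
            raise ValueError(f"turn {index}: end must be after start")
        duration = end - start
        commands.append([
            "ffmpeg",
            "-i", video_path,            # source video
            "-ss", f"{start:.3f}",       # start of the turn, in seconds
            "-t", f"{duration:.3f}",     # length of the turn, in seconds
            f"{out_prefix}_{index:04d}.mp4",
        ])
    return commands


# Example with made-up turn boundaries for a made-up file name.
for command in build_cut_commands("video.mp4", [(0.0, 4.5), (10.25, 12.0)], "turn"):
    print(" ".join(command))
```

Each command could then be passed to `subprocess.run` once the input file exists and `ffmpeg` is installed.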

Some of our Publications using this Corpus:

- Andrea Vidal, Ali Salman, Wei-Cheng Lin, and Carlos Busso, "MSP-face corpus: A natural audiovisual emotional database," in ACM International Conference on Multimodal Interaction (ICMI 2020), Utrecht, The Netherlands, October 2020, pp. 397-405.

This material is based upon work supported by the National Science Foundation under Grant IIS-1453781. Any opinions, findings, and conclusions or recommendations expressed in this material are those of the author(s) and do not necessarily reflect the views of the National Science Foundation.

Copyright Notice: This material is presented to ensure timely dissemination of scholarly and technical work. Copyright and all rights therein are retained by authors or by other copyright holders. All persons copying this information are expected to adhere to the terms and constraints invoked by each author's copyright. In most cases, these works may not be reposted without the explicit permission of the copyright holder.