Multimodal Driver Monitoring (MDM) corpus:

A Naturalistic Corpus to Study Driver Attention

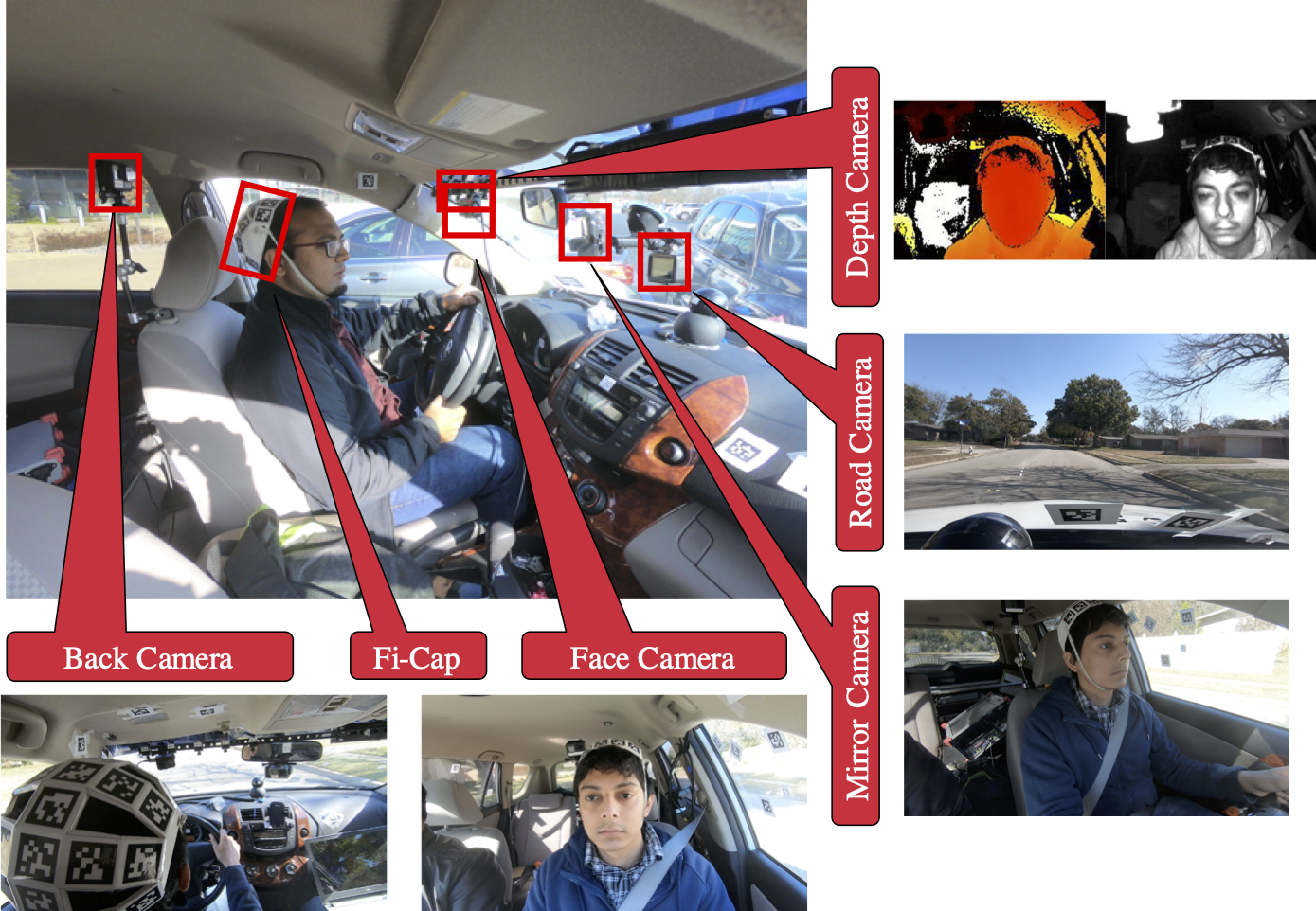

We collected the MDM dataset to study driver attention and to facilitate the design of models for head pose and gaze estimation. The database was recorded with multimodal sensors while drivers performed various tasks.


We present the multimodal driver monitoring (MDM) database, which is specifically designed to study the driver's visual attention. It addresses some of the challenges of existing driver monitoring datasets, including their limited size and their narrow scope, which focuses solely on either the driver's head pose or the driver's gaze zones. The MDM corpus is a new multimodal dataset that considers both head pose and gaze direction to facilitate learning the complex interplay among head pose, eye movements, and the resulting gaze. We achieve this goal by providing frame-based head pose labels, together with the spatial positions of the target markers, inside and outside the vehicle, at which the drivers glanced. We have collected data from 59 subjects, balanced by gender and spanning different ethnic groups.
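Because the corpus pairs frame-based head pose with the spatial positions of glanced-at targets, one natural derived quantity is the direction from the driver's head to the target, and how far that direction deviates from where the head is pointing (the part of the gaze shift presumably carried by the eyes). The sketch below is illustrative only: the function names, the axis convention, and the assumption that head position, head forward axis, and target positions share one vehicle coordinate frame are ours, not the corpus's documented format.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def gaze_angles(head_pos: Vec3, target_pos: Vec3) -> Tuple[float, float]:
    """Yaw and pitch (degrees) of the ray from the head to a target marker.

    Assumed axis convention (hypothetical, not taken from the corpus): x points
    to the driver's right, y points up, and z points forward.
    """
    dx, dy, dz = (t - h for t, h in zip(target_pos, head_pos))
    yaw = math.degrees(math.atan2(dx, dz))                    # left/right angle
    pitch = math.degrees(math.atan2(dy, math.hypot(dx, dz)))  # up/down angle
    return yaw, pitch


def head_gaze_offset(head_forward: Vec3, head_pos: Vec3, target_pos: Vec3) -> float:
    """Angle (degrees) between the head's forward axis and the ray to the target."""
    d = [t - h for t, h in zip(target_pos, head_pos)]
    dot = sum(a * b for a, b in zip(head_forward, d))
    norm = math.sqrt(sum(a * a for a in head_forward)) * math.sqrt(sum(b * b for b in d))
    if norm == 0.0:
        raise ValueError("head_forward and the head-to-target ray must be non-zero")
    cos_angle = max(-1.0, min(1.0, dot / norm))  # clamp against rounding error
    return math.degrees(math.acos(cos_angle))
```

For example, a head at the origin facing straight ahead and a marker at (1, 0, 1) gives a yaw of about 45 degrees and a head-to-gaze offset of about 45 degrees, suggesting that the eyes, not the head, account for the whole shift in that hypothetical frame.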

The corpus is multimodal: we use multiple RGB cameras and a depth camera to record the drivers.

Fi-Cap

Our solution for estimating frame-based head pose labels relies on fiducial markers with highly contrasting black-and-white patterns, placed on a solid helmet with a predefined structure. The positions and orientations of multiple fiducial points can be tracked with simple algorithms, and they are then used to estimate the driver's head pose.
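The page does not specify how the tracked fiducial points are turned into a head pose. As an illustration of the general idea only, the sketch below uses the Kabsch (SVD) algorithm, a standard swapped-in technique, to fit the rotation and translation that map the helmet's known marker layout onto measured marker positions. It assumes the markers' 3D coordinates are available in both the helmet frame and the camera frame; if the markers are only observed in a single 2D image, a perspective-n-point solver would be needed instead. The function names and the yaw-pitch-roll convention are hypothetical.

```python
import numpy as np


def estimate_rigid_pose(model_pts, observed_pts):
    """Kabsch/SVD fit of R, t such that observed is approximately R @ model + t.

    model_pts:    (N, 3) marker coordinates in the helmet's own frame (N >= 3, not collinear).
    observed_pts: (N, 3) the same markers measured in the camera frame, in the same order.
    """
    P = np.asarray(model_pts, dtype=float)
    Q = np.asarray(observed_pts, dtype=float)
    p0, q0 = P.mean(axis=0), Q.mean(axis=0)  # centroids
    H = (P - p0).T @ (Q - q0)                # 3x3 cross-covariance matrix
    U, _, Vt = np.linalg.svd(H)
    d = np.sign(np.linalg.det(Vt.T @ U.T))   # -1 would indicate a reflection
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T  # proper rotation (det = +1)
    t = q0 - R @ p0
    return R, t


def rotation_to_ypr(R):
    """Yaw, pitch, roll in degrees, assuming R = Rz(yaw) @ Ry(pitch) @ Rx(roll)."""
    yaw = np.degrees(np.arctan2(R[1, 0], R[0, 0]))
    pitch = np.degrees(np.arcsin(np.clip(-R[2, 0], -1.0, 1.0)))
    roll = np.degrees(np.arctan2(R[2, 1], R[2, 2]))
    return yaw, pitch, roll
```

Using more markers than the minimum of three makes the least-squares fit more tolerant of noise in individual marker detections, which is one reason a helmet covered with many fiducial points is convenient.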

RGB Cameras

We use four GoPro Hero 6 Black cameras in our data collection. All the cameras are set to record in full HD (1,920 x 1,080) in linear mode. The purpose and placement of each camera are as follows:

- Face Camera - This camera is placed in front of the driver to record the frontal view of the driver's face.

- Road Camera - This camera is placed in the center of the dashboard facing the road to capture road-related information.

- Mirror Camera - This camera is placed under the rearview mirror to obtain a profile view of the driver's face.

- Back Camera - This camera is placed behind the driver to record the Fi-Cap helmet.

Depth Camera

We place a CamBoard pico flexx camera close to the face camera. This camera records point cloud data using time-of-flight technology, which provides a robust estimate of the depth map under varying illumination conditions, including at night. The camera also records grayscale images illuminated with IR LEDs.
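The depth camera records point clouds directly, but it can help to see how a depth map and a point cloud relate. Under a pinhole camera model, each pixel (u, v) with depth z back-projects to x = (u - cx) z / fx and y = (v - cy) z / fy. The sketch below applies this to a whole depth map; the intrinsics fx, fy, cx, cy are hypothetical parameters that would have to come from the sensor's calibration, and treating zero depth as invalid is an assumption.

```python
import numpy as np


def depth_to_points(depth, fx, fy, cx, cy):
    """Back-project an HxW depth map (meters) into an Nx3 point cloud.

    Pixels with depth <= 0 are treated as invalid and dropped (an assumption).
    """
    z = np.asarray(depth, dtype=float)
    h, w = z.shape
    u, v = np.meshgrid(np.arange(w), np.arange(h))  # column and row index of every pixel
    x = (u - cx) * z / fx
    y = (v - cy) * z / fy
    pts = np.stack([x, y, z], axis=-1).reshape(-1, 3)
    return pts[pts[:, 2] > 0]
```

Points come out in row-major pixel order, so a point's index can be mapped back to its pixel if the invalid-pixel mask is kept.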

CAN-Bus

The UTDrive vehicle records the CAN-Bus information during the recordings. From the CAN-Bus, we obtain information about the accelerator, brakes, steering, and speed of the vehicle. The UTDrive vehicle also has gas and brake pressure sensors.

Microphone Array

The UTDrive vehicle is also equipped with a microphone array with five microphones. While our protocol does not include any task that elicits speech, the audio information is useful for understanding potential auditory distractions.

Dewetron

The CAN-Bus and the microphone array are connected to a Dewetron system, which stores and synchronizes these modalities.
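Once streams share a common clock, pairing them usually reduces to matching timestamps. The page does not describe how the synchronized CAN-Bus samples are matched to video frames, so the sketch below is only one plausible approach: for each query time (for example, a video frame timestamp), it picks the index of the nearest sample in a sorted timestamp list. The function name and the assumption that both streams are expressed in seconds on the same clock are hypothetical.

```python
from bisect import bisect_left
from typing import List, Sequence


def nearest_indices(sample_times: Sequence[float], query_times: Sequence[float]) -> List[int]:
    """For each query time, return the index of the closest sample time.

    sample_times must be sorted ascending; ties go to the earlier sample.
    """
    n = len(sample_times)
    if n == 0:
        raise ValueError("sample_times must not be empty")
    out = []
    for q in query_times:
        i = bisect_left(sample_times, q)  # first sample with time >= q
        if i == 0:
            out.append(0)
        elif i == n:
            out.append(n - 1)
        else:
            before, after = sample_times[i - 1], sample_times[i]
            out.append(i if after - q < q - before else i - 1)
    return out
```

Binary search keeps each lookup at O(log n), which matters when a high-rate signal such as audio is matched against long video recordings.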

We ask the drivers to perform multiple tasks while seated in the driver's seat of the UTDrive car:

- Looking at predefined markers inside the car while parked.

- Looking at a continuously moving marker outside the car.

- Glancing quickly at predefined markers inside the car while driving.

- Looking at landmarks on the street while driving.

- Following navigation on a phone while driving.

- Operating the radio while driving.

- Naturalistic driving without any secondary task.

For further information on the corpus, please read:

- Sumit Jha, Mohamed F. Marzban, Tiancheng Hu, Mohamed H. Mahmoud, Naofal Al-Dhahir, and Carlos Busso, "The multimodal driver monitoring database: A naturalistic corpus to study driver attention," ArXiv e-prints 2101.04639, pp. 1-14, December 2020.

Release of the Corpus:

The multimodal driver monitoring dataset is licensed free of cost to academic institutions under a Federal Demonstration Partnership (FDP) Data Transfer and Use Agreement. We have also established a licensing program for commercial entities interested in our corpus; we will provide the details soon. If you need more information, please contact Prof. Carlos Busso.

Some of our Publications using this Corpus:

- Tiancheng Hu, Sumit Jha, and Carlos Busso, "Temporal head pose estimation from point cloud in naturalistic driving conditions," IEEE Transactions on Intelligent Transportation Systems, accepted, 2021.

- Sumit Jha, Mohamed F. Marzban, Tiancheng Hu, Mohamed H. Mahmoud, Naofal Al-Dhahir, and Carlos Busso, "The multimodal driver monitoring database: A naturalistic corpus to study driver attention," ArXiv e-prints 2101.04639, pp. 1-14, December 2020.

- Tiancheng Hu, Sumit Jha, and Carlos Busso, "Robust driver head pose estimation in naturalistic conditions from point-cloud data," in IEEE Intelligent Vehicles Symposium (IV2020), Las Vegas, NV, USA, October 2020.

This work was supported by SRC / Texas Analog Center of Excellence (TxACE), under task 2810.014. Any opinions, findings, and conclusions or recommendations expressed in this material are those of the author(s) and do not necessarily reflect the views of the sponsor agencies.

Copyright Notice: This material is presented to ensure timely dissemination of scholarly and technical work. Copyright and all rights therein are retained by authors or by other copyright holders. All persons copying this information are expected to adhere to the terms and constraints invoked by each author's copyright. In most cases, these works may not be reposted without the explicit permission of the copyright holder.