Research at MSP

Multimodal Interfaces

Multimodal interfaces can play an important role in tracking verbal and nonverbal information from the user. The challenge for the research community is to design practical algorithms that can be easily integrated with current systems. Following this direction, we are interested in:

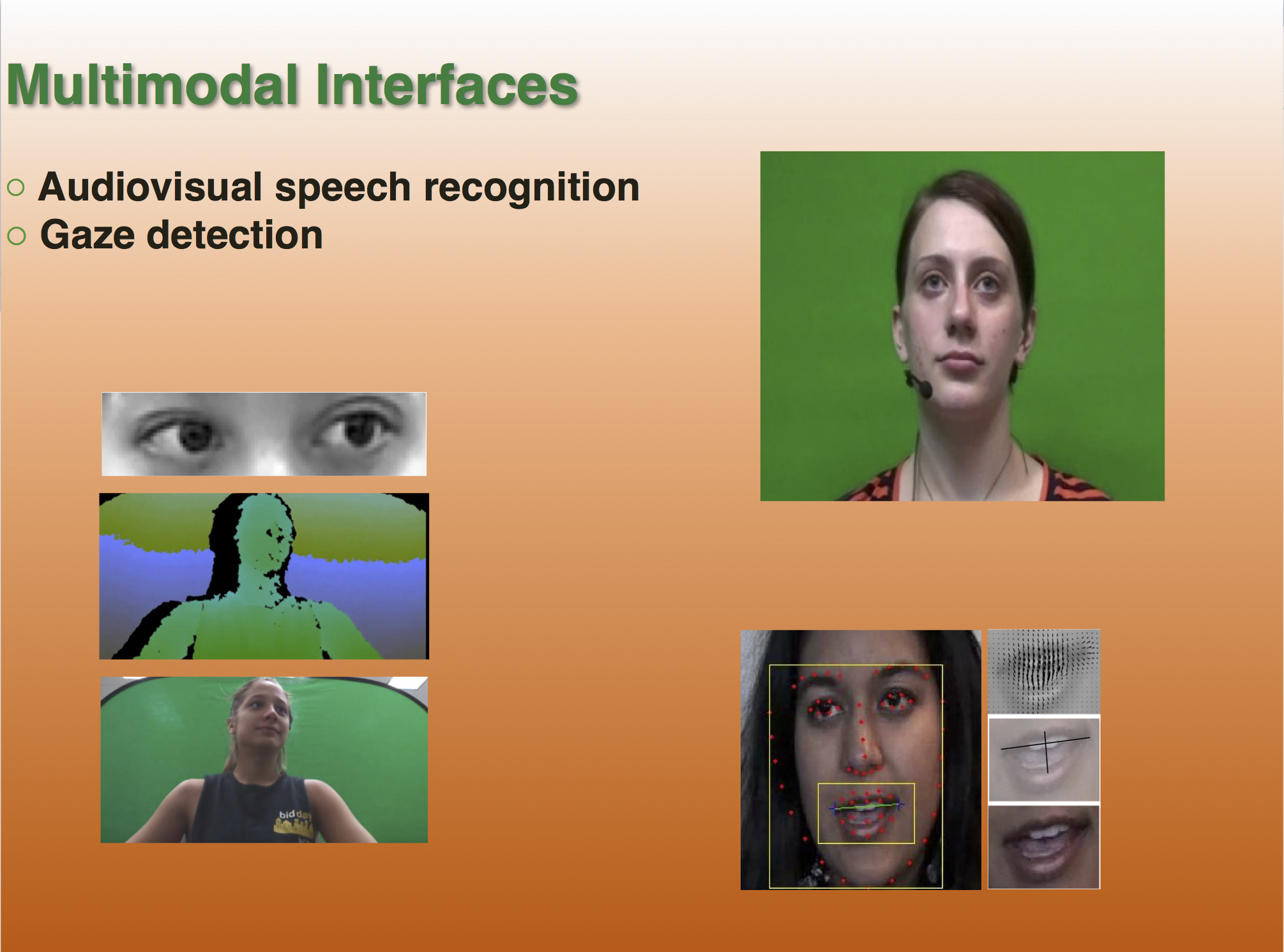

Gaze detection: designing user-independent gaze tracking systems that require neither calibration nor any cooperation from the user. These gaze tracking systems can facilitate visual attention modeling, improving the design of the interface.
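A minimal sketch can illustrate the idea of calibration-free, appearance-based gaze estimation. It assumes each eye-pair image has already been flattened into a feature vector and that samples with known gaze targets are available from *other* users. It learns a principal-component (eigen) space from those samples, projects a new user's sample into it, and returns a similarity-weighted average of the gaze targets of the closest training samples. The function names, the inverse-distance similarity, and the parameters `k_comp` and `k_nn` are hypothetical choices for illustration, not the method of the publications listed on this page. PCA is done with power iteration so the sketch needs only the standard library.

```python
import math
from typing import List, Sequence, Tuple

Vec = List[float]


def fit_eigenspace(xs: Sequence[Vec], k: int, iters: int = 300) -> Tuple[Vec, List[Vec]]:
    """Return the mean and top-k principal directions (power iteration + deflation)."""
    n, d = len(xs), len(xs[0])
    mu = [sum(col) / n for col in zip(*xs)]
    centered = [[a - m for a, m in zip(x, mu)] for x in xs]
    cov = [[sum(r[i] * r[j] for r in centered) / n for j in range(d)] for i in range(d)]
    comps: List[Vec] = []
    for _ in range(k):
        v = [1.0 / (i + 1) for i in range(d)]  # deterministic, non-uniform start vector
        s = math.sqrt(sum(x * x for x in v))
        v = [x / s for x in v]
        for _step in range(iters):
            w = [sum(cov[i][j] * v[j] for j in range(d)) for i in range(d)]
            norm = math.sqrt(sum(x * x for x in w))
            if norm < 1e-12:  # no variance left along this direction
                break
            v = [x / norm for x in w]
        lam = sum(v[i] * sum(cov[i][j] * v[j] for j in range(d)) for i in range(d))
        comps.append(v)
        # Deflate so the next pass converges to the next principal direction.
        cov = [[cov[i][j] - lam * v[i] * v[j] for j in range(d)] for i in range(d)]
    return mu, comps


def project(x: Vec, mu: Vec, comps: List[Vec]) -> Vec:
    """Coordinates of x in the eigenspace (centered, then dotted with each component)."""
    centered = [a - m for a, m in zip(x, mu)]
    return [sum(a * b for a, b in zip(centered, v)) for v in comps]


def estimate_gaze(query: Vec, train_feats: Sequence[Vec],
                  train_gaze: Sequence[Tuple[float, float]],
                  k_comp: int = 2, k_nn: int = 3) -> Tuple[float, float]:
    """Similarity-weighted average of the gaze targets of the k_nn closest samples."""
    mu, comps = fit_eigenspace(train_feats, k_comp)
    q = project(query, mu, comps)
    ranked = sorted(
        ((math.dist(q, project(f, mu, comps)), g) for f, g in zip(train_feats, train_gaze)),
        key=lambda t: t[0],
    )
    nearest = ranked[:k_nn]
    weights = [1.0 / (dist + 1e-6) for dist, _ in nearest]  # inverse distance as similarity
    total = sum(weights)
    gx = sum(w * g[0] for w, (_, g) in zip(weights, nearest)) / total
    gy = sum(w * g[1] for w, (_, g) in zip(weights, nearest)) / total
    return gx, gy
```

Because no sample from the test user enters `fit_eigenspace`, the estimate requires no per-user calibration; how well this generalizes depends on how diverse the training users are.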

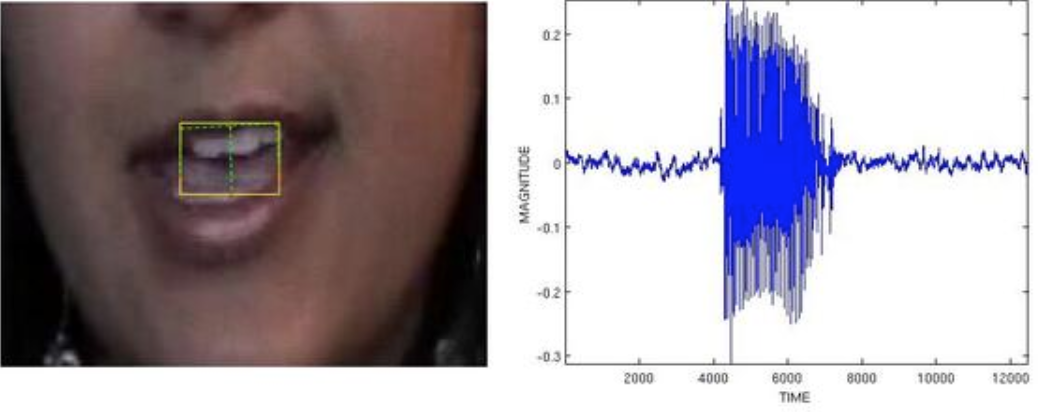

Audiovisual speech recognition: lipreading techniques can improve the performance of automatic speech recognition systems in the presence of environmental noise and of challenging speech modes such as whispered speech.
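One common way to exploit lipreading is decision-level fusion: separate audio and visual classifiers score each vocabulary item, and their log-likelihoods are combined with a stream weight that shifts trust toward the visual stream as acoustic conditions degrade. The sketch below assumes per-word log-likelihoods are already available and uses a hypothetical linear mapping from estimated SNR to the audio weight (`low_db`, `high_db`). It illustrates this generic stream-weighting scheme, not the specific systems in the publications listed on this page.

```python
from typing import Dict


def audio_stream_weight(snr_db: float, low_db: float = -5.0, high_db: float = 20.0) -> float:
    """Hypothetical linear mapping from estimated SNR (dB) to an audio weight in [0, 1]."""
    lam = (snr_db - low_db) / (high_db - low_db)
    return min(1.0, max(0.0, lam))


def fuse_decision(audio_loglik: Dict[str, float], visual_loglik: Dict[str, float],
                  snr_db: float) -> str:
    """Pick the word maximizing lam * log p(audio | w) + (1 - lam) * log p(video | w)."""
    lam = audio_stream_weight(snr_db)
    scores = {w: lam * audio_loglik[w] + (1.0 - lam) * visual_loglik[w] for w in audio_loglik}
    return max(scores, key=scores.get)


audio = {"one": -12.0, "two": -10.0}   # toy scores: the audio model prefers "two"
visual = {"one": -8.0, "two": -11.0}   # toy scores: the lipreading model prefers "one"
print(fuse_decision(audio, visual, snr_db=0.0))   # noisy: visual stream dominates, prints "one"
print(fuse_decision(audio, visual, snr_db=25.0))  # clean: audio stream dominates, prints "two"
```

For whispered speech, SNR alone may not reflect how unreliable the acoustic stream is, so a different reliability estimate would be needed to set the weight.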


Selected Publications:

- Fei Tao and Carlos Busso, "Bimodal recurrent neural network for audiovisual voice activity detection," in Interspeech 2017, Stockholm, Sweden, August 2017, pp. 1938-1942.

- Fei Tao, John H.L. Hansen, and Carlos Busso, "Improving boundary estimation in audiovisual speech activity detection using Bayesian information criterion," in Interspeech 2016, San Francisco, CA, USA, September 2016, pp. 2130-2134.

- Fei Tao, John H.L. Hansen, and Carlos Busso, "An unsupervised visual-only voice activity detection approach using temporal orofacial features," in Interspeech 2015, Dresden, Germany, September 2015, pp. 2302-2306.

- Fei Tao and Carlos Busso, "Lipreading approach for isolated digits recognition under whisper and neutral speech," in Interspeech 2014, Singapore, September 2014.

- Nanxiang Li and Carlos Busso, "User-independent gaze estimation by exploiting similarity measures in the eye pair appearance eigenspace," in International Conference on Multimodal Interaction (ICMI 2014), Istanbul, Turkey, November 2014, pp. 335-338.

- Nanxiang Li and Carlos Busso, "Evaluating the robustness of an appearance-based gaze estimation method for multimodal interfaces," in International Conference on Multimodal Interaction (ICMI 2013), Sydney, Australia, December 2013, pp. 91-98.

- Tam Tran, Soroosh Mariooryad, and Carlos Busso, "Audiovisual corpus to analyze whisper speech," in IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP 2013), Vancouver, BC, Canada, May 2013.

- Xing Fan, Carlos Busso, and John H.L. Hansen, "Audio-visual isolated digit recognition for whispered speech," in European Signal Processing Conference (EUSIPCO-2011), Barcelona, Spain, August-September 2011.

- Carlos Busso, Panayiotis G. Georgiou, and Shrikanth S. Narayanan, "Real-time monitoring of participants interaction in a meeting using audio-visual sensors," in IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP 2007), vol. 2, Honolulu, HI, USA, April 2007, pp. 685-688.
